
Inside the Shift Toward Internal Data Governance As Global AI Regulation Fragments

As global AI rules diverge, DataRep Non-Executive Director Onur Korucu explains why effective governance now depends on understanding data flows and infrastructure.

Key Points

AI accountability accelerates while governance lags behind real data flows, infrastructure, and system behavior, creating hidden risk that policy frameworks alone cannot control.

Onur Korucu, a Non-Executive Director at DataRep and advisor on AI governance, explains why static rules fail when AI risk scales faster than human oversight.

Organizations shift toward internal, data-driven governance models that embed continuous monitoring, system visibility, and shared accountability across teams.


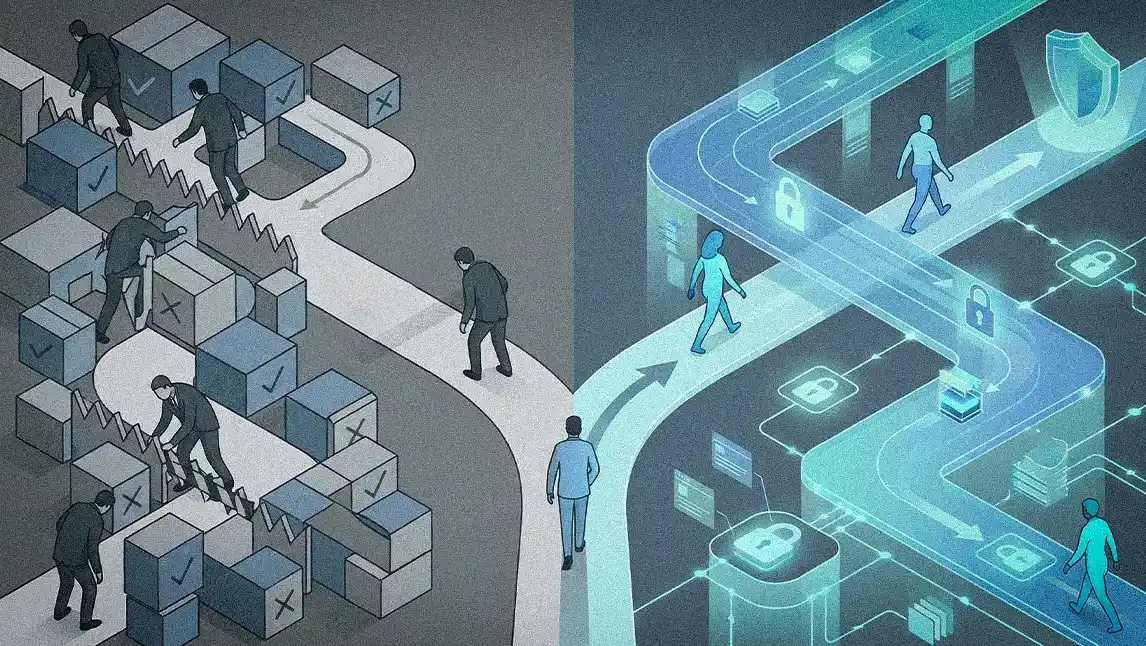

AI is now embedded in production systems and data workflows, and accountability is moving faster than governance can adapt. Many organizations track regulations closely but lack visibility into how data, models, and infrastructure actually operate in practice. The result is a growing gap between documented governance and real control, leaving critical risks hidden inside everyday systems.

Onur Korucu is a Non-Executive Director at DataRep, a data protection firm, and an advisory board member at Dominican University of California and at the International Association of Privacy Professionals (IAPP), where her work centers on data protection, cybersecurity, and AI governance. Honored by Business Post as one of the Top 100 Most Powerful People in Tech in Ireland, Korucu was awarded the 2024 IAPP Privacy Vanguard Award for EMEA and is a globally recognized TEDx Speaker. She has held senior leadership roles at Microsoft, PwC, and Grant Thornton, and was named the Women in Tech Network’s 2025 Cybersecurity Leader of the Year. She argues that traditional approaches to governance are no longer fit for purpose.

"You cannot manage AI the old way. It’s dynamic, it evolves every day, and the gap between legal intent and technological reality is where most of the risk actually sits," says Korucu. Her point cuts to the core challenge facing governance teams. AI expands risk faster than static frameworks can capture it, turning what were once manageable lists into constantly shifting systems problems.

More risks, more problems: "The old IT governance mindset was to write down your top five risks in a document. But when you’re classifying AI risks at a major tech company, you’re no longer dealing with a short list," says Korucu. The scale, volume, and velocity of AI risk quickly overwhelm manual processes, forcing governance to shift toward continuous monitoring and machine-driven oversight.

Governance in motion: "You cannot govern AI by writing rules for software alone. It runs on infrastructure, hardware, and constantly moving data, and when governance focuses on only one layer, risk simply shifts elsewhere," she continues. "That's why governance has to be living and operational, built on dynamic risk assessment, continuous monitoring, and privacy and security by design from the very beginning." Without technical fluency at the leadership level, organizations often mistake policy artifacts for control while risk quietly accumulates across systems and data flows.

These internal challenges are amplified by a fragmented global regulatory landscape where political priorities increasingly shape AI outcomes. One-size-fits-all compliance strategies no longer hold as rules shift, stall, or disappear entirely. Korucu points to the EU’s withdrawn proposal for an AI Liability Directive as a clear signal of how unstable accountability has become. As jurisdictions diverge, universal standards are losing traction, pushing organizations to build internal governance systems that give them control over data and risk even as external rules remain in flux.

The DIY imperative: Traditional security and governance standards were built for a more predictable era. As AI becomes more complex and embedded across organizations, centralized frameworks no longer hold on their own. Companies are increasingly forced to design governance models that reflect their specific systems, risks, and environments. "We cannot centralize everything. Different sectors and companies need their own frameworks," she says.

Unicorn hunting: Some leaders still search for a single expert who can master every dimension of AI, from system design to legal and regulatory risk. Korucu is blunt about that expectation. "If someone tells you they understand AI from both the technical and legal sides, that’s a lie."

AI risk has moved out of the abstract and into enforcement. Actions like the UK’s criminalization of non-consensual deepfakes, alongside rising scrutiny of AI systems, signal that accountability is no longer optional. The question facing leaders is no longer whether AI should be governed, but where governance should begin. Korucu is clear that the problem is often misframed. AI does not invent behavior on its own. It reflects the data, assumptions, and decisions embedded in the systems that feed it, and poorly governed inputs only magnify existing bias and risk.

That is why she argues the real challenge is data governance, not AI control. When organizations fail to understand what data they are feeding into AI systems, bias and risk are inevitable outcomes rather than anomalies. "If you have no idea what is going on in your own home, you cannot understand what is going on outside your home," she concludes.