
How Leaders Are Re-Mapping Security Boundaries As Companies Trade Proprietary Data For AI Gains

Sohail Iqbal, Chief Information Security Officer at Veracode, explains how intentional data sharing for AI value is dismantling perimeter security and redefining the human role as governance over trust.

Key Points

Companies intentionally share proprietary data with AI platforms to gain efficiency and automation, breaking the assumption that security can rely on fixed enterprise boundaries.

Sohail Iqbal, Chief Information Security Officer at Veracode, describes how this shift renders the traditional security perimeter obsolete and reframes risk as a data ownership problem.

Security adapts by following the data through tracking, control, and revocation, while humans move from manual execution to oversight and governance at machine scale.

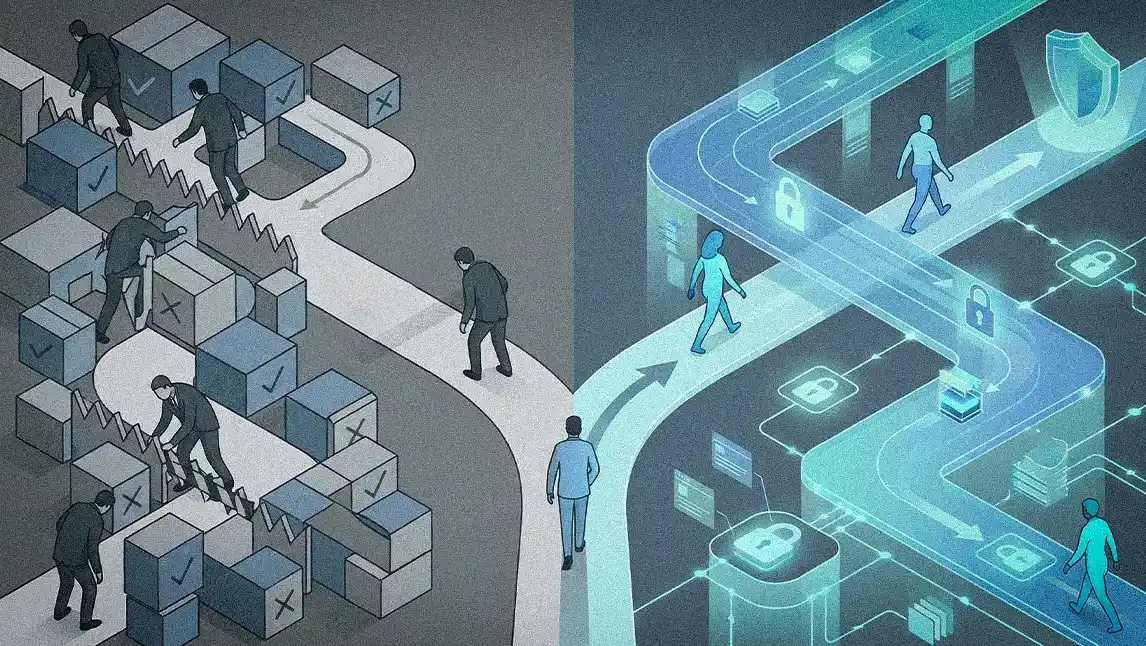


To capture the value of AI, a growing number of organizations are deliberately pushing proprietary data to third-party platforms. This is an intentional business strategy, not an accidental data leak to be managed, and it directly challenges the foundations of perimeter-based enterprise security, forcing a re-evaluation of where a company’s true boundaries now lie.

Sohail Iqbal is a senior technology strategist at the center of this shift. As Chief Information Security Officer at Veracode, with prior security leadership roles at CarGurus and Dow Jones / WSJ, he brings deep experience managing risk at scale. Iqbal, who also serves as a member of the Rutgers Cybersecurity Council, argues that the industry must move beyond its legacy mindset and embrace a new security mandate.

"Your perimeter is no longer a boundary around your company assets. The boundary is wherever your data goes, whether it lives inside your ecosystem or in a third-party ecosystem that is learning from it," says Iqbal. For decades, the standard model was a layered approach built on the assumption that a company’s most valuable data remained securely inside its own environment. Today, he says, data is often exchanged for a competitive edge.

The willing trade: It's a move that reflects a deliberate exchange rather than a loss of control. "Companies let data go out willfully now to these third parties. They’re okay with sharing that data with them in the hope that, by learning from the data, they'll be able to deliver better value, better efficiency, better automation," explains Iqbal.

Recording in progress: The shift is clearly visible in everyday workflows. Internal meetings are routinely recorded and analyzed by third-party tools, and many organizations now accept that the AI-generated output they get back, such as transcripts, summaries, and action items, justifies the exchange. Governed by updated privacy and security agreements, this kind of transaction reflects a fundamentally different posture toward data sharing. "If you go back five years, would you have allowed someone to record your call or conversation? Probably not," notes Iqbal. "Today, you’re comfortable with Zoom recording it. What was once strictly proprietary is now something organizations knowingly allow a third party to access."

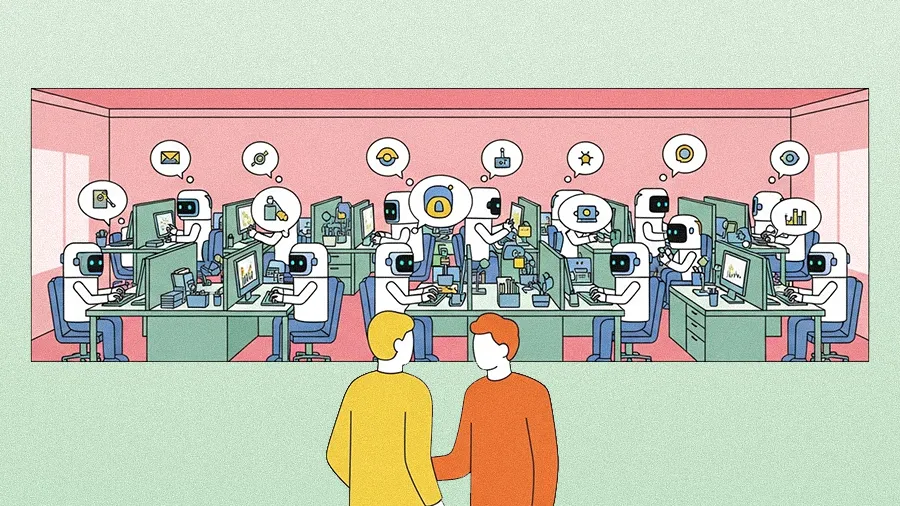

The core challenge is that AI operates at a scale and velocity that legacy, human-centric security processes were not built to handle. Familiar threats like unauthorized access are now amplified. The emerging field of data security is attracting massive investment, but the underlying problem is one that money alone can't solve.

Accelerated risk: "The risks are still the same: unauthorized access, authentication, authorization. But they're on the steroids of AI. And what's fundamentally changing them is really the scale and the velocity," says Iqbal.

Fighting fire with fire: The primary way to manage risk at a machine-driven scale is with a machine-centric solution. To combat the vulnerabilities and flaws in code generated by AI, for example, organizations must use AI to remediate them. It is functionally impossible for old-world manual review processes to keep pace, says Iqbal. "If you're utilizing an AI to write a code, you better build your proficiency or leverage a platform which helps you to remediate that code also utilizing an AI."

Data on a leash: As the old perimeter becomes less relevant and data flows more widely, the focus for many security leaders is shifting. The priority moves from simple prevention to a new framework built on a specific set of actions, so that security can follow the data wherever it goes. "You still need to be able to track, trace, revoke, and protect your data, even when it no longer lives inside your own environment and is being shared with third parties that are using AI to learn from it," Iqbal insists.

A new mandate of this kind calls for more than incremental updates to existing security practices. It demands a rethinking of how methodologies like DevSecOps and shift-left operate in a machine-driven environment. As AI increasingly handles execution at scale, the role of the security professional shifts toward oversight, governance, and trust stewardship in a data-centric ecosystem.

"It’s a fundamental philosophical shift. It’s a technological shift," Iqbal concludes. "We have to embrace it and figure out how humans move into oversight and governance, rather than serving as the executioner."