How Lack Of Observability Undermines Enterprise AI Value As Autonomous Agents Expand

Rosa Chang Claro, Director of Strategic Engagement at Dynatrace, positions observability as the control layer enterprises need to trust AI agents, prove value, and govern risk at scale.

Key Points

AI agents scale faster than enterprise visibility, leaving leaders unable to verify accuracy, measure impact, or detect risk as decisions move downstream without oversight.

Rosa Chang Claro, Director of Strategic Engagement at Dynatrace, frames observability as the missing control layer as AI systems become more autonomous and human judgment shifts upstream.

She outlines a solution built on clean data, cloud readiness, pipeline-first execution, and GenAI observability to prioritize value, move projects into production, and govern AI at scale.

AI agents are starting to make decisions before leaders fully understand how they behave. As free, default tools spread across the enterprise, activity is scaling faster than visibility. Models respond, agents act, and outputs move downstream with little insight into performance, accuracy, or impact. When organizations cannot see what their AI systems are doing in real time, value becomes difficult to prove and risk becomes easy to miss. This is the observability dilemma taking shape inside modern enterprises.

That’s why we spoke with Rosa Chang Claro, a multinational business strategist with over 15 years of experience. As Director of Strategic Engagement at the observability firm Dynatrace, and with a track record driving transformation at Microsoft, SAP, and GE, she has a ground-level view of what’s at stake. Chang Claro sees this moment as a turning point. As AI systems become more autonomous, observability moves from a technical nice-to-have to a core business requirement for trust, control, and value.

"Observability is becoming essential to understanding how agents perform and how much real value AI delivers to the organization. We need visibility into different AI scenarios to know whether the information is correct, whether hallucinations are occurring, or whether something is not working as it should," says Chang Claro. But the push for widespread AI adoption has a potential downside.

Prompt, paste, repeat: The convenience of these tools can foster a culture that erodes our ability to question and validate AI-generated outputs, she warns. "These tools can make us lazy, encouraging us to simply prompt, copy, paste, and be done. While they can help to accelerate insights, it doesn't replace our critical thinking that drives innovation, resilience, and our final decision-making process based on data validation, context, judgement, and how current the information is across all its different sources."

The empathy edge: The implication for leaders is that as AI automates routine cognitive tasks, the value of human capital is shifting toward skills like high-level analysis and collaborative problem-solving. "Having a unique point of view is what creates differentiation in the age of AI," Chang Claro continues. "That's why soft skills like empathy, human interaction, and face-to-face contact are becoming more valuable."

Stuck at 10%: A sound technical foundation is an essential prerequisite for effective human oversight. She points to its absence as a common reason so many AI initiatives fail, citing the age-old principle of "garbage in, garbage out." She says leaders must first get their data architecture right. "While 90% of organizations are trying to implement AI, only 10% have gone live. Imagine the inefficiency and cost that comes from a poor understanding of data quality or the use case you are trying to solve. Are we really understanding what the agent is going to do and how much value it will bring?"

To move from the 90% still trying to implement AI into the 10% that have gone live, Chang Claro offers a clear sequence of steps. It begins with migrating to the cloud to accelerate innovation and improving data quality through cleansing, source verification, and data governance. With this foundation in place, organizations can then identify and prioritize AI use cases that align with their strategic goals. These use cases should deliver value across four dimensions: revenue generation, risk mitigation, cost optimization, and business transformation.

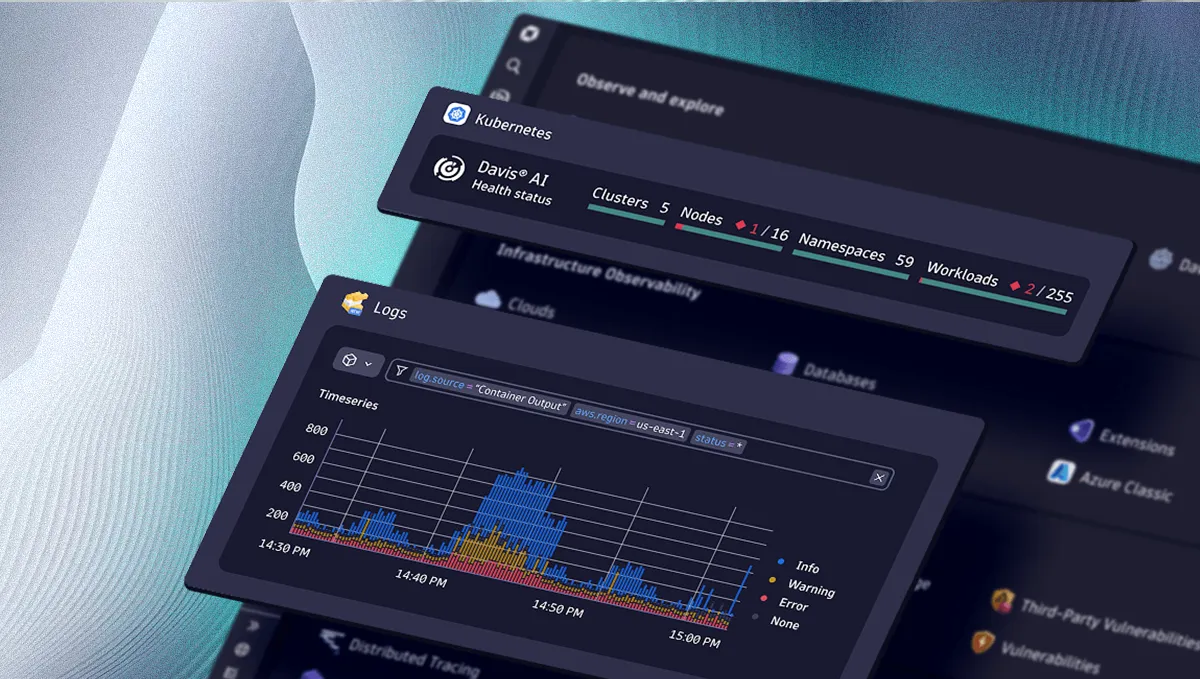

Data as the differentiator: "It all comes down to data," says Chang Claro. "That means valuing every type of data an organization has. This includes structured and unstructured data, logs, metrics, and traces—semi-structured data. In the coming years, competitive differentiation will be determined by real-time reliability, accuracy, quality, and the correct interpretation of that data. This foundation enables an effective observability strategy and successful AI implementation, and accelerates business insights to optimize the decision-making process."

Looking ahead, Chang Claro sees agentic AI accelerating both scale and consequence. As agents take on more autonomy, understanding outcomes is no longer enough. Leaders will need deeper analytics to understand behavior, context, decision paths, and downstream impact. That expansion also introduces a new responsibility. Sustainability becomes a fifth pillar of AI value, requiring clear accountability for energy, water, and compute consumption through stronger governance. By embedding sustainability into observability practices, tracking resource usage alongside performance metrics, businesses can achieve both operational excellence and responsible growth.
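
To make the idea of tracking resource usage alongside performance metrics concrete, here is a minimal Python sketch. It is an illustration only, not Dynatrace's product or any method described by Chang Claro. The names `AgentObserver`, `CallRecord`, and `echo_agent` are hypothetical, the token count is a crude whitespace word count, and the `wh_per_1k_tokens` energy factor is a placeholder assumption that would need to be replaced with a measured value.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallRecord:
    agent: str
    latency_s: float   # performance metric
    tokens: int        # rough proxy for compute consumed
    energy_wh: float   # estimated from tokens, not measured


@dataclass
class AgentObserver:
    # Hypothetical conversion factor (assumption, not a real benchmark).
    wh_per_1k_tokens: float = 0.3
    records: List[CallRecord] = field(default_factory=list)

    def observe(self, agent: str, fn: Callable[[str], str], prompt: str) -> str:
        """Run one agent call and record latency plus estimated resource use."""
        start = time.perf_counter()
        output = fn(prompt)
        latency = time.perf_counter() - start
        # Whitespace word count stands in for a real tokenizer.
        tokens = len(prompt.split()) + len(output.split())
        energy = tokens / 1000 * self.wh_per_1k_tokens
        self.records.append(CallRecord(agent, latency, tokens, energy))
        return output

    def summary(self) -> dict:
        """Aggregate performance and resource metrics across all calls."""
        n = len(self.records)
        return {
            "calls": n,
            "total_tokens": sum(r.tokens for r in self.records),
            "total_energy_wh": sum(r.energy_wh for r in self.records),
            "avg_latency_s": sum(r.latency_s for r in self.records) / n if n else 0.0,
        }


def echo_agent(prompt: str) -> str:
    # Stand-in for a real model or agent call.
    return "ack: " + prompt


observer = AgentObserver()
observer.observe("echo", echo_agent, "summarize the quarterly report")
report = observer.summary()
```

Keeping the performance and resource fields in the same record means one report can serve both operational and sustainability governance. A production setup would draw these values from real telemetry rather than estimates.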

In that future, observability becomes the connective tissue. "It’s essential to have deep observability into data," Chang Claro concludes. "Data analytics is truly essential to understand how the systems are performing." Without that visibility, organizations cannot verify accuracy, measure value, or govern AI responsibly at scale. As agents proliferate, the ability to observe clearly becomes the difference between controlled progress and unmanaged complexity.

The views and opinions expressed are those of Rosa Chang Claro and do not represent the official policy or position of any organization.