As Internal AI Outpaces Traditional Governance, DPO Role Evolves into Data Guardian

Nanoprecise's Faizan Wani explains the DPO's shift to Data Guardian, the risk of internal AI use, and why governance needs to be built into AI architecture from the start.

Key Points

The biggest governance risk in the AI era comes from the unintended repurposing of data by internal AI systems, a challenge traditional, reactive compliance models are not equipped to handle.

Faizan Wani, DPO at Nanoprecise Sci Corp, asserts that the DPO role must evolve into a "Data Guardian" who leads system design, shifting governance from a compliance checklist to an architectural mandate.

He advocates for privacy by design and a shift-left methodology, embedding automated safeguards, threat modeling for internal risks, and data segmentation into the earliest stages of development.

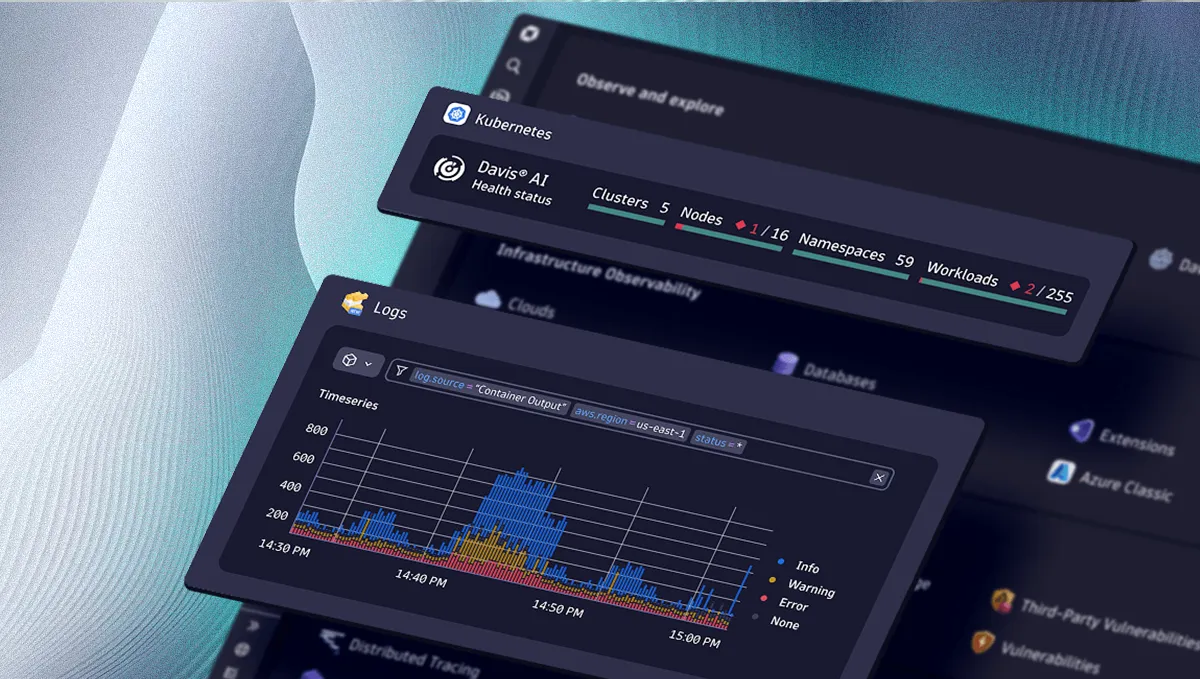

Enterprise governance has spent years hardening the perimeter against external attackers. Now, the primary risk sits inside the system itself. Internal AI tools are repurposing data at scale and turning small design decisions into systemic exposure, while traditional governance models can’t keep up with technology that processes vast, contextual data streams in real time. The breakdown happens at the architectural level, where the rules still exist, but the systems enforcing them were never built for AI.

To understand the systems needed for modern data protection, we spoke with Faizan Wani, Senior Manager of IT, Cyber Operations and Data Protection at predictive maintenance solution provider Nanoprecise Sci Corp. With more than six years of experience designing and operating security programs in AI-driven, industrial environments, he sees a widening gap between how data is governed and how AI systems actually behave.

"The biggest challenge in AI right now is not malicious intent. The biggest challenge is unintended data use by internal AI systems," says Wani. That internal risk emerges because many governance models weren't built for the speed and scale of modern technology. A reactive, check-the-box approach rooted in compliance and focused on basic AI legal issues can’t keep pace with systems ingesting thousands of data streams at a time. The challenge is compounded by the unique nature of industrial data. As Wani explains, IoT data is deeply contextual, revealing sensitive details about operations and supply chains. That elevates the risk beyond regulatory fines and into the strategic heart of the business, where strong governance can become a catalyst for innovation.

Architects of trust: The new environment demands an evolution of the Data Protection Officer into a “Data Guardian” who helps lead system design from its inception. The modern DPO’s expanding responsibilities place them at the heart of architecture, where their grasp of the human layer helps the organization understand the full lifecycle of its data, from the principles guiding its collection to the path it travels through the company. "Earlier, DPOs would not be added into the system design phase. But nowadays, DPOs are the ones who lead system design and system architecture because DPOs are attached to data. So data actually drives the system architecture," Wani says.

Shift left: Success in the evolving DPO role relies on a new playbook, starting with a core philosophy of privacy by design, which establishes data protection as a non-negotiable architectural requirement. That philosophy, a key part of board-level oversight, comes to life through a shift-left methodology that, as Wani explains, embeds governance at the earliest stages of development.

Design, don't patch: A proactive mindset can be as simple as scheduling brief, regular check-ins with leaders from product, support, and the C-suite to understand new initiatives long before implementation. "Governance has to be integrated within the design principles of your organization. Once you enact privacy by design, rather than treating it as a feature or an option, it helps greatly in building that ecosystem around the company," he advises.

In practice, that philosophy translates to embedding governance into daily workflows. At the earliest stage of product discussion, Wani’s team gets involved by reviewing Jira story points. From there, they threat model for both external malicious intent and the internal risk of unintended data use. That proactive oversight leads to architectural solutions such as data segmentation, which creates separate pipelines for different data types, and automated safeguards. "You don't want to jumble up different types of data in one location. You need to ensure that a lifecycle policy is attached and all of this needs to be automated. You can't have a compliance program that's driven manually," he stresses.
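As a rough illustration of what segmentation with an attached, automated lifecycle policy could look like, here is a minimal Python sketch. It is not Nanoprecise's implementation: the data classes, retention periods, purposes, and the `SegmentedStore` class are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum


class DataClass(Enum):
    """Hypothetical data types; each one gets its own segment."""
    SENSOR_TELEMETRY = "sensor_telemetry"
    CUSTOMER_CONTACT = "customer_contact"


@dataclass(frozen=True)
class LifecyclePolicy:
    retention: timedelta          # how long records in the segment may be kept
    allowed_purposes: frozenset   # what the data may be used for


# Assumed policy table; real retention periods would come from legal review.
POLICIES = {
    DataClass.SENSOR_TELEMETRY: LifecyclePolicy(
        timedelta(days=365), frozenset({"predictive_maintenance"})),
    DataClass.CUSTOMER_CONTACT: LifecyclePolicy(
        timedelta(days=90), frozenset({"support"})),
}


@dataclass
class Record:
    data_class: DataClass
    payload: dict
    ingested_at: datetime


class SegmentedStore:
    """Keeps each data class in its own segment, each with a lifecycle policy."""

    def __init__(self, policies):
        self._policies = dict(policies)
        self._segments = {dc: [] for dc in self._policies}

    def ingest(self, record):
        # Data without a registered policy is rejected, never stored by default.
        if record.data_class not in self._policies:
            raise ValueError(f"no lifecycle policy for {record.data_class}")
        self._segments[record.data_class].append(record)

    def purge_expired(self, now=None):
        """Drop records older than their segment's retention; return the count."""
        now = now or datetime.now(timezone.utc)
        removed = 0
        for dc in list(self._segments):
            cutoff = now - self._policies[dc].retention
            kept = [r for r in self._segments[dc] if r.ingested_at >= cutoff]
            removed += len(self._segments[dc]) - len(kept)
            self._segments[dc] = kept
        return removed

    def count(self, data_class):
        return len(self._segments[data_class])
```

In a setup like this, `purge_expired` would be run by a scheduler rather than by a person, which is the point of the quote: retention is applied automatically, not tracked in a manual compliance checklist.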

To make the concept tangible, Wani points to multi-factor authentication (MFA) as an example. The system enforces the policy, removing the option for an employee to bypass it. It's the principle of enforcement by design in action. For him, the same principle that underpins professional-grade security should apply to the governance of AI. "Telling people to do something means they will find a bypass. That’s why security principles must be built into the system by design, so governance is enforced from the beginning, not added at the end," Wani concludes.
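The same enforcement-by-design idea can be sketched for internal AI data use. The following is an illustrative Python example, not something described in the interview: the dataset names, purposes, and the `GuardedDataset` class are assumptions. The only way to read the data is through a call that checks the declared purpose, and there is no flag to skip the check.

```python
class PurposeDenied(PermissionError):
    """Raised when data is requested for a purpose it was not approved for."""


# Hypothetical mapping of datasets to the purposes they were collected for.
ALLOWED_PURPOSES = {
    "vibration_telemetry": {"predictive_maintenance"},
    "customer_contact": {"support"},
}


class GuardedDataset:
    """Wraps a dataset so every read must state a purpose that is then checked."""

    def __init__(self, name, rows):
        self._name = name
        self._rows = list(rows)

    def read(self, purpose):
        # Default-deny: unknown datasets have no approved purposes.
        allowed = ALLOWED_PURPOSES.get(self._name, set())
        if purpose not in allowed:
            raise PurposeDenied(
                f"{self._name!r} is not approved for purpose {purpose!r}")
        # Return a copy so callers cannot alter the stored rows.
        return list(self._rows)
```

Under these assumptions, a model-training job that requests `vibration_telemetry` for an unapproved purpose fails at the read call itself, rather than depending on someone remembering a written policy.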