
How One Pharma Engineer Stabilizes Multi-Agent AI Systems with Auditable, Pipeline-First Strategies

Ronitt Mehra, AI Engineer at Amneal Pharmaceuticals, shows how a pipeline-first approach and an autonomous remediation framework can turn fragile multi-agent AI into resilient production systems.

Key Points

Enterprises face growing difficulty moving AI from concept to production as multi-agent systems fail in ways that are hard to detect and repair.

Ronitt Mehra, AI Engineer at Amneal Pharmaceuticals, presents a pipeline-first approach that treats scalability, performance, and infrastructure design as core requirements from day one.

He shows how autonomous remediation tools like CTA-ACT and parallel cross-functional workflows create AI systems that stay resilient, auditable, and trustworthy in production.


Scaling AI is no longer a model problem. It's a pipeline problem. Enterprises need systems that stay reliable, monitor themselves, and recover without manual intervention. Multi-agent architectures create new failure modes, and many teams still lack the tools to find issues early, understand why they happened, and repair them before damage spreads.

Ronitt Mehra, AI Engineer at Amneal Pharmaceuticals, builds directly for this challenge. His background includes applied research at Columbia University and hands-on engineering experience at Qualcomm, giving him a practical view of what it takes to make complex systems reliable. Amneal is a publicly traded pharmaceutical company with annualized revenue of around $3 billion, according to its earnings reports. It primarily researches and develops generics, but it also manufactures and distributes specialty drugs.

At a recent AI Agents Hackathon, an event funded by industry heavyweights like OpenAI and Anthropic, Mehra developed a framework that serves as a tangible example of how to design for AI resilience from the ground up. The applications of AI within a modern pharmaceutical company are numerous, from R&D to manufacturing efficiency to back-end administration and clinician-facing applications. But complex implementations require careful planning and auditing that cannot be bought off the shelf.

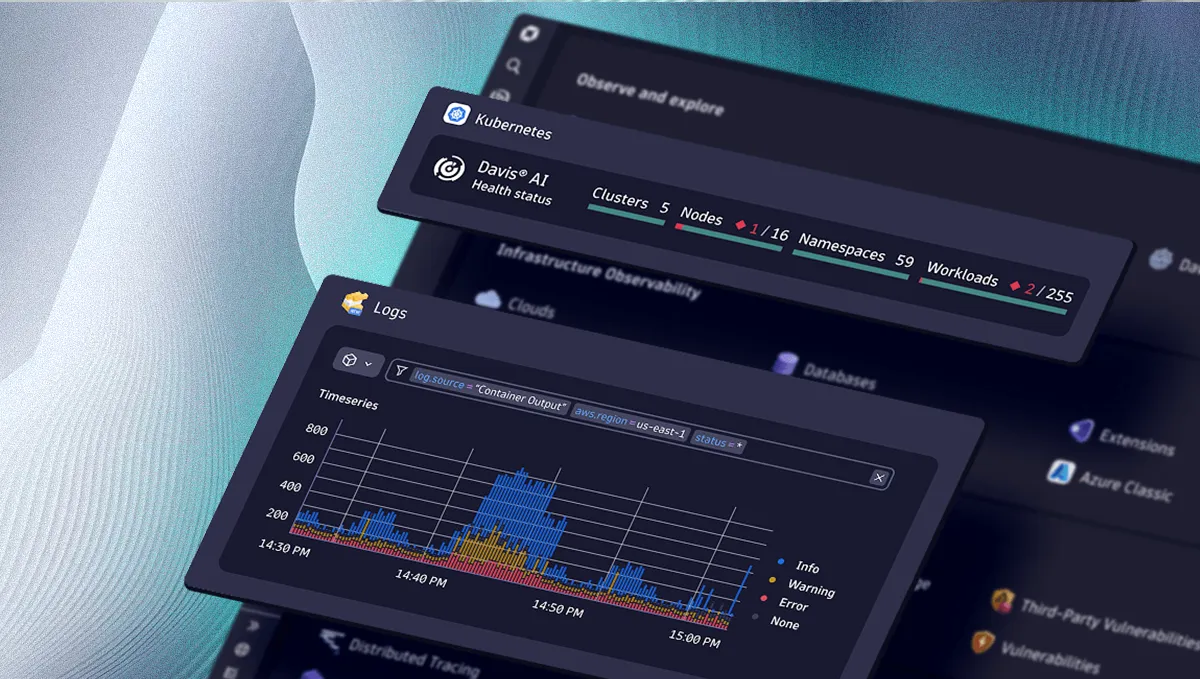

Mehra's project, CTA-ACT, is a multi-agent system where ingest, retrieval, and auditor agents work in concert to detect anomalies, analyze root causes, and autonomously remediate failures based on a "causal trust principle." Mehra explains that the business value lies in its ability to reduce the time and cost typically associated with debugging these tangled agentic systems.
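The article does not describe CTA-ACT's code, so the following is only a hypothetical sketch of the general detect, diagnose, remediate loop the paragraph outlines, not Mehra's implementation. It assumes a three-stage pipeline, a fixed latency budget, an "upstream-first" root-cause heuristic (the earliest failing stage is blamed, since its failure propagates downstream), and a small remediation playbook; all of these names and values are illustrative. It does not attempt to model the "causal trust principle," which the article does not define. Every decision is written to an audit log, reflecting the emphasis on auditability.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical pipeline order: upstream stages come first.
PIPELINE_ORDER = ["ingest", "retrieval", "generation"]

# Hypothetical remediation playbook: stage -> action name.
PLAYBOOK = {
    "ingest": "replay_last_batch",
    "retrieval": "rebuild_index",
}


@dataclass
class Event:
    stage: str                   # pipeline stage that emitted the event
    latency_ms: float            # observed latency for this step
    error: Optional[str] = None  # error message, if the step failed


def detect_anomalies(events, latency_budget_ms=500.0):
    """Flag events that errored or exceeded an assumed fixed latency budget."""
    return [e for e in events if e.error is not None or e.latency_ms > latency_budget_ms]


def diagnose_root_cause(anomalies):
    """Upstream-first heuristic: the earliest anomalous stage is treated as the
    root cause, because its failure can propagate to every later stage."""
    stages = {e.stage for e in anomalies}
    for stage in PIPELINE_ORDER:
        if stage in stages:
            return stage
    return None


def remediate(root_cause, audit_log):
    """Apply a playbook action if one exists, otherwise escalate; log every decision."""
    if root_cause is None:
        audit_log.append({"decision": "no_action", "reason": "no anomalies"})
        return "no_action"
    action = PLAYBOOK.get(root_cause, "escalate_to_human")
    audit_log.append({"decision": action, "root_cause": root_cause})
    return action


def run_auditor(events):
    """One pass of the detect -> diagnose -> remediate loop."""
    audit_log = []
    anomalies = detect_anomalies(events)
    cause = diagnose_root_cause(anomalies)
    action = remediate(cause, audit_log)
    return action, audit_log


if __name__ == "__main__":
    events = [
        Event("ingest", 120.0),
        Event("retrieval", 900.0),                         # slow retrieval (root cause)
        Event("generation", 80.0, error="empty context"),  # downstream symptom
    ]
    action, log = run_auditor(events)
    print(action)  # rebuild_index
    print(log)
```

Causes without a playbook entry fall through to `escalate_to_human`, so in this sketch the loop acts autonomously only where a remediation has been defined in advance.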

The framework is the product of his "pipeline-first" philosophy, which prioritizes production readiness from the outset by treating key parameters as foundational requirements rather than afterthoughts. "In launching from POC to production, the bottlenecks primarily exist in the production pipeline, not in the actual POC," says Mehra. "The goal is not just to create a POC, but to build it from the start with parameters like scalability, performance, latency, and throughput in mind."
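One way to act on "parameters like scalability, performance, latency, and throughput" from day one is to encode them as explicit budgets that every build must pass, rather than measuring them only after launch. The sketch below is an illustration under assumed values: the `Requirements` fields and thresholds are hypothetical, and the 95th-percentile latency uses the nearest-rank method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    p95_latency_ms: float      # hypothetical latency budget
    min_throughput_rps: float  # hypothetical throughput floor


def p95(values):
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("need at least one latency sample")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def check(req, latencies_ms, requests_completed, window_s):
    """Return the list of violated requirements; an empty list means the build passes."""
    failures = []
    observed_p95 = p95(latencies_ms)
    throughput = requests_completed / window_s
    if observed_p95 > req.p95_latency_ms:
        failures.append(f"p95 latency {observed_p95:.0f} ms exceeds {req.p95_latency_ms:.0f} ms")
    if throughput < req.min_throughput_rps:
        failures.append(f"throughput {throughput:.1f} rps below {req.min_throughput_rps:.1f} rps")
    return failures


if __name__ == "__main__":
    req = Requirements(p95_latency_ms=800, min_throughput_rps=20)
    sample = [100.0] * 95 + [2000.0] * 5  # simulated load-test latencies
    print(check(req, sample, requests_completed=1200, window_s=60))  # []
```

Running a check like this in CI turns latency and throughput into gates a POC must clear, instead of surprises discovered in production.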

Plan before the platform: Mehra's approach is shaped by his experience in a regulated pharmaceutical environment, where resource constraints and specialized data-handling requirements demand a different playbook. He notes that success in such settings is often tied to proactive decisions about infrastructure and tooling. "Before you even start to ideate on a POC, you should define what a suitable infrastructure will look like and create a plan for how you will scale it."
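A scaling plan can begin as back-of-the-envelope arithmetic. This sketch uses Little's law (average requests in flight = arrival rate × average latency) to estimate how many serving replicas a target load would need. The function name, the per-replica concurrency, and the 70% utilization target are illustrative assumptions, not figures from Amneal.

```python
import math


def replicas_needed(target_rps, avg_latency_s, concurrency_per_replica, target_utilization=0.7):
    """Estimate serving replicas via Little's law: in-flight requests = rate x latency.

    target_utilization leaves headroom so replicas are not planned to run at 100%.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    in_flight = target_rps * avg_latency_s
    return math.ceil(in_flight / (concurrency_per_replica * target_utilization))


if __name__ == "__main__":
    print(replicas_needed(50, 0.8, 8))   # 8
    print(replicas_needed(100, 0.8, 8))  # 15 (doubling the load)
```

For example, 50 requests per second at 0.8 s average latency means about 40 requests in flight; at 8 concurrent requests per replica and 70% target utilization, that rounds up to 8 replicas.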

Stay in your lane: That structure, Mehra says, works in tandem with a workflow built on frequent, parallel communication. He argues this approach keeps every team's view of the development lifecycle accurate and helps all components work in sync. "We have separate teams for AWS infrastructure, data lake management, and AI solutions," he explains. "This task segregation is necessary as it not only increases your fault tolerance but also provides a more holistic perspective on the system development life cycle."

Parallel, not piecemeal: "All the verticals need to be worked on in parallel. It requires significant cross-functional collaboration," Mehra continues. "For instance, the AI team must collaborate with the infrastructure team to define server and port requirements. That can only happen when everyone is working in parallel."

All this work on process, governance, and collaboration ultimately centers on the question of trust. For Mehra, trust is built continuously, through post-deployment feedback, a disciplined production mindset, and collaboration that keeps all teams working in sync.

Thinking like a regulator: "For governance, a feedback mechanism is essential because no project is perfect. Feedback is what helps you reiterate on and improve your project after deployment, using techniques like RLHF and RLAIF," Mehra says. His view is that trust is earned through continuous adjustment, not assumptions about how a system will behave at scale. "You need to have a compliance mindset when scaling your POC to production. This is especially true for security, where your application must be built to follow modern principles like zero-trust policies."
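Zero trust means no request is trusted just because it originates inside the network; every call is authenticated. As a minimal sketch of that idea applied to agent-to-agent messages, the code below signs each message with a per-agent HMAC-SHA256 key and rejects unknown senders, altered bodies, and stale timestamps. The agent names, keys, and 30-second window are hypothetical, and a production system would more likely rely on managed identities or mutual TLS than on hard-coded secrets.

```python
import hashlib
import hmac
import json
import time

# Hypothetical per-agent secrets; in practice these would come from a secrets manager.
AGENT_KEYS = {
    "ingest-agent": b"ingest-secret",
    "retrieval-agent": b"retrieval-secret",
}
MAX_AGE_S = 30  # assumed replay window in seconds


def sign(sender, payload, now=None):
    """Attach sender identity, timestamp, and an HMAC-SHA256 signature to a payload."""
    ts = int(time.time() if now is None else now)
    body = json.dumps({"sender": sender, "ts": ts, "payload": payload}, sort_keys=True)
    sig = hmac.new(AGENT_KEYS[sender], body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "sig": sig}


def verify(message, now=None):
    """Verify every message, even from internal agents; return the payload or raise."""
    body = json.loads(message["body"])
    key = AGENT_KEYS.get(body.get("sender"))
    if key is None:
        raise PermissionError("unknown sender")
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["sig"]):
        raise PermissionError("bad signature")
    current = time.time() if now is None else now
    if abs(current - body["ts"]) > MAX_AGE_S:  # too old, or dated in the future
        raise PermissionError("stale message")
    return body["payload"]


if __name__ == "__main__":
    msg = sign("ingest-agent", {"doc_id": 42})
    print(verify(msg))  # {'doc_id': 42}
```

`hmac.compare_digest` performs a constant-time comparison, which avoids leaking how much of a forged signature matched.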

Mehra's perspective speaks to the common struggle of translating proofs of concept into robust production systems, reinforcing the idea that there is no one-size-fits-all solution for enterprise AI. "The structuring of teams depends on the kind of organization, its size, and its goals," Mehra concludes. "A huge tech firm is probably going to be organized a little differently from a mid-cap pharmaceutical like ours."