Data Cleaning ‘Janitorial Work’ is Key to Unlocking Life Sciences Breakthroughs

Ming "Tommy" Tang, Director of Bioinformatics at AstraZeneca, discusses data quality challenges in bioinformatics and how they stall scientific discovery.

Key Points

As the primary cause, he points to a gap in data standards enforcement with massive consequences for the drug development pipeline.

Tang advocates for a "trust but verify" approach, where AI speeds up human analysis and collaboration instead of replacing the researchers who validate results.

*The views and opinions expressed by Ming "Tommy" Tang are his own and do not necessarily represent those of his former or current employers.

The story of artificial intelligence in life sciences is one of limitless potential and automated discovery. But in reality, complex datasets expose just how far most organizations actually are from being AI-ready. In fields like bioinformatics, for instance, where scientists and clinicians use computational tools to analyze and interpret large volumes of biological data, experts say poor-quality data and inconsistent practices are holding scientific discovery back.

Ming "Tommy" Tang*, Director of Bioinformatics at AstraZeneca, says the promise of AI is inseparable from the painstaking work of data preparation. Trained in a molecular cancer biology lab, Tang had his "wow" moment not from a biological discovery but, ironically, from a 2GB data file that exceeded Excel's processing capacity. Looking back, he says, this technical hurdle is what forced him to learn the coding skills that would define the rest of his career. He went on to work at leading institutions like MD Anderson Cancer Center and Dana-Farber Cancer Institute, where he led a bioinformatics team for the NCI Cancer Moonshot’s Cancer Immunologic Data Commons (CIDC) initiative. Today, he also serves as a scientific advisor for companies like Pythia Biosciences to help develop multi-omic data analysis software.

The janitor's to-do list: According to Tang, the greatest near-term potential of AI lies in automating routine tasks like code generation, report writing, and improving clinical trial logistics. Likening the tedious, foundational tasks that precede analysis to 'janitorial work,' he describes the unglamorous yet essential duties that consume most of a bioinformatician's time. "Cleaning metadata is one of the most time-consuming parts of bioinformatics, especially when you download data from the public domain. The metadata is usually messy, which means you need to read the corresponding paper to understand the experimental design, download the spreadsheet, and then extract the useful information," Tang explains. "In reality, we spend most of our time on this type of work: cleaning up data, combining different data sources into the same data frame, and matching sample names."
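To make the "matching sample names" chore concrete, here is a minimal sketch in plain Python. It is an illustration only, not Tang's workflow: the field names (`sample`, `condition`, `read_count`), the normalization rule, and the helper functions are all assumptions invented for this example. It normalizes inconsistently written sample names, joins two sources on the cleaned name, and reports metadata samples that found no match instead of dropping them silently.

```python
import re

def normalize_sample_name(name: str) -> str:
    """Trim, lowercase, and collapse runs of spaces, dashes, dots, or underscores."""
    return re.sub(r"[\s\-_.]+", "_", name.strip().lower())

def merge_by_sample(metadata_rows, measurement_rows, key="sample"):
    """Join two lists of dicts on the normalized sample name.

    Returns (merged_rows, unmatched), where `unmatched` holds the original
    metadata sample names that had no measurement, so they can be reviewed.
    """
    measurements = {normalize_sample_name(r[key]): r for r in measurement_rows}
    merged, unmatched = [], []
    for row in metadata_rows:
        norm = normalize_sample_name(row[key])
        match = measurements.get(norm)
        if match is None:
            unmatched.append(row[key])
            continue
        # Combine both records and store the cleaned sample name.
        merged.append({**match, **row, key: norm})
    return merged, unmatched

# Hypothetical inputs: the same samples spelled differently in each source.
metadata = [
    {"sample": "Tumor-01 ", "condition": "treated"},
    {"sample": "tumor_02", "condition": "control"},
    {"sample": "Normal.03", "condition": "control"},
]
measurements = [
    {"sample": "TUMOR_01", "read_count": 1200},
    {"sample": "Tumor 02", "read_count": 950},
]

merged, unmatched = merge_by_sample(metadata, measurements)
print(merged)
print("No measurement found for:", unmatched)
```

Returning the unmatched names alongside the merged rows is a deliberate choice here: it keeps a human in the position of checking whether a mismatch is a spelling variant the rule missed or a sample that is genuinely absent.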

But even with AI to increase efficiency, Tang says, strong programming skills are still required for accurate verification. Because AI-generated code can yield incorrect results, human oversight is still necessary for now. Fortunately, verification is already a formal process in the high-stakes world of scientific discovery. For Tang, it’s a good reminder that the human element must stay central, even as automation speeds up analysis. In his view, AI is a tool like any other, one that accelerates the cycle of human collaboration. "It cannot replace you. We still need humans in the loop to understand the data analysis," he says.

More data, more problems: "The notion that bioinformatics involves straightforward 'press button' analysis is misleading," Tang says. In reality, it requires extensive data cleaning and exploratory analysis. "You can't just throw a ton of bad data into any old AI algorithm and expect magic." As an example, Tang points to 'batch effects,' or subtle variations that arise when experiments are run on different days. "AI models can create misleading results because they can’t distinguish between biological signals and technical artifacts," Tang says. As a result, human-led exploratory data analysis is now a non-negotiable prerequisite.
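As a rough illustration of the kind of exploratory check this implies, the sketch below compares how far group averages sit apart when the same samples are grouped by processing day versus by biological condition. It is a deliberately crude heuristic on invented numbers, not a method attributed to Tang; the field names (`batch`, `condition`, `expression`) and helper functions are assumptions made for the example, and real analyses would typically look at many genes at once with tools such as PCA.

```python
from collections import defaultdict
from statistics import mean

def group_means(samples, label, value="expression"):
    """Average the measured value within each group defined by `label`."""
    groups = defaultdict(list)
    for s in samples:
        groups[s[label]].append(s[value])
    return {g: mean(v) for g, v in groups.items()}

def mean_gap(samples, label, value="expression"):
    """Spread between the highest and lowest group average for `label`."""
    means = group_means(samples, label, value)
    return max(means.values()) - min(means.values())

# Hypothetical measurements for one gene across two run days.
samples = [
    {"batch": "day1", "condition": "treated", "expression": 10.1},
    {"batch": "day1", "condition": "control", "expression": 9.9},
    {"batch": "day2", "condition": "treated", "expression": 14.2},
    {"batch": "day2", "condition": "control", "expression": 13.8},
]

batch_gap = mean_gap(samples, "batch")
condition_gap = mean_gap(samples, "condition")

# If run day separates the samples more than biology does, a model trained
# on this data may be learning the technical artifact.
batch_dominates = batch_gap > condition_gap
print(f"gap by batch: {batch_gap:.2f}, gap by condition: {condition_gap:.2f}")
print("Possible batch effect:", batch_dominates)
```

In this toy data the run day explains far more of the variation than the treatment does, which is exactly the pattern a person would want to catch before handing the data to any model.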

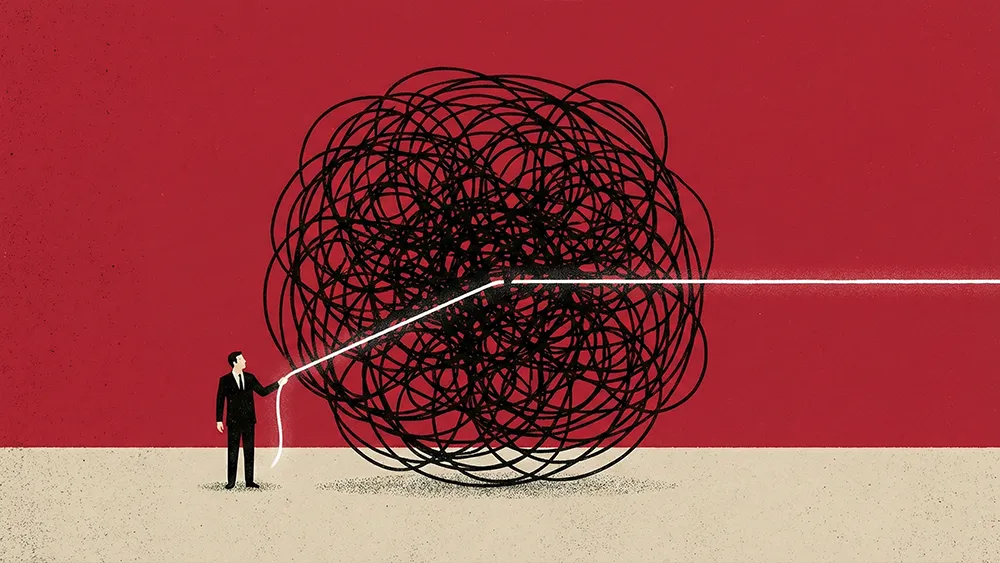

The enforcement gap: Tang also notes that industry standards meant to solve the problem already exist. "Everybody knows the FAIR principles for scientific data: Findable, Accessible, Interoperable, and [Reusable]. But in reality, getting people to follow those rules, actually enforcing them, is still a big challenge."

The ten-year bottleneck: Unfortunately, this gap can have real-world consequences in bioinformatics, a topic Tang often discusses publicly on platforms like X. He connects the messy data problem directly to one of the biggest roadblocks in his industry: drug development. "The clinical trial process is a bottleneck for sure. Each phase can take a couple of years: Phase 1 can take two years, Phase 2 can take three years, and Phase 3 can take another three years. That's already almost 10 years." Meanwhile, most drugs still fail because of what Tang says is an all-too-familiar problem. "For an AI algorithm to be able to predict toxicity, you need to have enough data for it to train on first. We simply lack the high-quality human toxicity data needed to train models that can predict outcomes accurately. That's the problem."

However uncertain the path forward, Tang insists the answer is not to fear AI but to embrace it with a healthy dose of skepticism. Instead of outright rejection, he offers a simple but powerful mantra for critical thinkers in the age of automated science: "Trust but verify. No matter what output AI gives you, always attempt to verify it with your own expertise."