
Managing AI In An Era Of Compute Scarcity: Governance Takes Center Stage

Palanivel Rajan Mylsamy, Director of Engineering Program Management at Cisco, shows how compute scarcity and strong governance now define whether enterprise AI can scale.

Key Points

AI workloads now strain limited compute capacity, forcing enterprises to focus on what is practical and scalable instead of what is theoretically possible.

Palanivel Rajan Mylsamy, Director of Engineering Program Management at Cisco, shows how governance keeps AI efforts from drifting into wasteful or low-impact work.

He outlines a path that uses intelligent routing, predictive visibility, and tiered infrastructure choices to direct compute power toward the work that delivers real value.


The measure of AI success is no longer just the power of the model, but the strength of the foundational architecture that supports it. As AI's analytical demands grow, compute power has become an increasingly scarce and valuable resource. Leaders are forced to balance the promise of innovation with the hard constraints of cost, capacity, and governance, shifting the central question of AI transformation from simply what's possible to what's practical, scalable, and operationally sound.

Palanivel Rajan Mylsamy, Director of Engineering Program Management at Cisco, has spent years navigating the real pressures of running AI at scale. His work blends operational discipline with technical depth, giving him a sharp sense of where architecture must hold steady and where governance keeps projects from slipping into AI theater. With experience guiding hybrid methodologies across global teams, he focuses on building systems that last. For him, the antidote to the resource crunch is straightforward: put governance at the center.

"To avoid the AI theater trap, we need real governance. This is where my role in business operations and program management becomes essential. A strong governance model lets us guide and redirect analysis to the right models so we don’t drain the power and capacity we have," says Mylsamy. That discipline shapes how he approaches resource allocation in the AI era, starting with what he sees as the industry’s most urgent constraint.

New-age gold rush: "The primary resource bottleneck today isn't human resources. It's compute power. With access to so many powerful LLMs, compute is becoming like gold," Mylsamy explains. "The prompts we're seeing in the industry are going deeper and deeper, and the analysis required to provide the right data is becoming more intensive. This is driving a massive need for data center capacity and power."

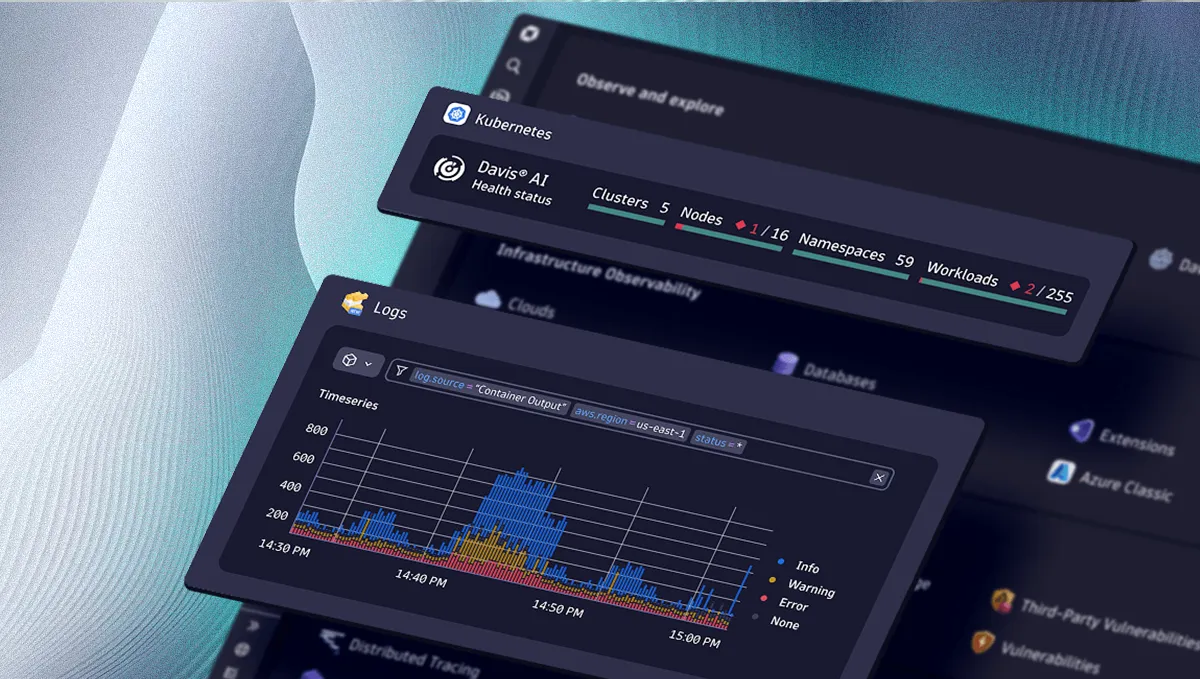

Intelligent routing: Mylsamy frames governance as the key to ROI. In practice, he says, that means adapting established industry frameworks such as CMMI (Capability Maturity Model Integration) and using maturity models to route resources effectively. The goal is to channel compute power toward the projects with the highest business impact. "We need to route lighter workloads the same way cloud systems manage capacity for disaster recovery and backups. That kind of intelligent routing lets us do more with less without overloading scarce compute resources."

Predictive visibility: He also uses AI to bring more clarity to large, fast-moving programs. "By structuring our data with AI, we get predictive program visibility. It lets us forecast slippages, dependency risks, and capacity overloads with much better accuracy," says Mylsamy. "Without that structure, you end up touching multiple areas at once instead of seeing the issues ahead of time."

For workload placement, Mylsamy advises leaders to tailor their approach to specific security, cost, or speed requirements, recommending a tiered strategy rather than a one-size-fits-all solution.

On-prem advantage: Different environments still have distinct roles, and Mylsamy sees on-prem infrastructure remaining essential in places where security trumps everything else. "The on-prem enterprise solutions are not going away. If we want to sell products to the federal market, for example, those systems must be highly secured," he says.

The best of both worlds: For teams balancing capability with budget, he points to hybrid models that keep automation local while shifting supporting functions to the cloud. This preserves performance without burning through expensive cloud compute. "Customers looking for capability with minimal infrastructure cost can pivot to a hybrid cloud. They can keep an automation stack running on their on-prem hardware, but move the assurance, visibility, and monitoring functions to the cloud," Mylsamy outlines. "This prevents them from using costly cloud resources for the automation itself and has a direct, positive impact on their budget."

One-click cloud: When issues arise, cloud environments often offer the fastest path to resolution, especially for customers who prioritize agility. "The trade-off becomes clear when a customer has an issue," he continues. "With an on-prem solution, I need to go through a phased migration window and provide a hotfix, which involves some hand-holding. With the cloud, however, we can resolve the issue for them in a single click."

Moving from a successful pilot to a full-scale deployment is a common challenge for leaders. Mylsamy notes that successful teams rely on a systematic workflow to decide the path forward, treating re-evaluation not as a failure but as a core function.

Sanity check: "We constantly bring our teams to the whiteboard to perform a sanity check and ensure the solutions we're building are still relevant," he says. From there, governance guides whether a new requirement needs a deeper architectural change or just an adjustment at the application layer, which keeps the foundation stable while teams move forward. He adds that scale only comes after the core use case proves itself, with enterprise-readiness layers added in phases instead of all at once.

While he advocates for flexibility, Mylsamy holds that scale depends on a solid foundation. "When it comes to scale, if an issue is architectural, you can't just put a wrapper around it. The solution must be architecturally supported," he concludes. A strong base creates a lasting fix and lets teams focus on building real capability instead of layering patch after patch.