How Managing Datasets Instead of Storage is Becoming the Deciding Factor for AI Success

Chadd Kenney, VP of Product Management at Pure Storage, lays out why AI only works at scale when enterprises move beyond storage and manage datasets with context, policy, and control.

Key Points

AI initiatives stall because data lives in fragmented systems without context or lineage, forcing teams to spend most of their time just figuring out what data exists before anything reaches production.

Chadd Kenney, VP of Product Management at Pure Storage, frames dataset management as the missing infrastructure layer that replaces storage-centric thinking and restores trust in data.

By managing datasets with embedded policy, lineage, and performance guarantees on a unified platform, organizations reduce risk, automate governance, and enable AI systems to operate reliably at scale.

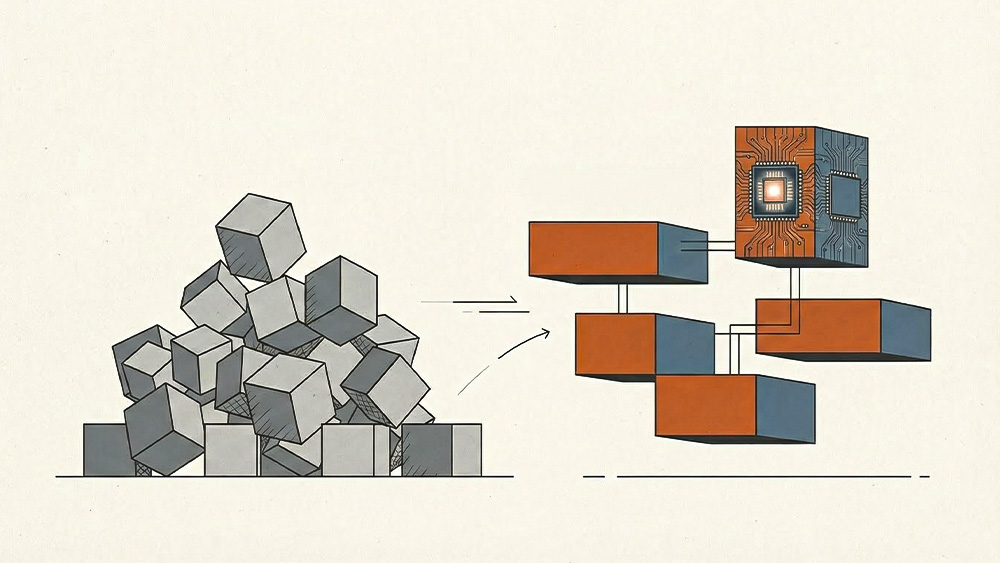

AI has outgrown the way enterprises think about data. Storage-centric models built around volumes and file systems no longer reflect how modern AI systems find and use information. When data sits in isolated stacks without shared context or lineage, reliability breaks down before AI ever reaches production. Delivering governed, trustworthy AI now depends on replacing storage management with something more fundamental: managing datasets as first-class infrastructure.

Chadd Kenney is Vice President of Product Management at Pure Storage and a founding member of its technical team, with a career spent building and scaling data platforms at companies including Clumio and Bluesky. Having led products through periods of rapid growth and acquisition, he has seen firsthand where traditional infrastructure breaks under modern demands. Kenney argues that for businesses to survive and thrive in the age of AI, they must stop treating data as a byproduct of systems and start treating it as a core asset with purpose, ownership, and intent.

"Data isn't an artifact of the creation of information. It is the lifeblood of what businesses require to make fundamental decisions," says Kenney. The change starts by confronting one of the primary operational challenges in many AI projects: simply figuring out what data exists.

Digital archaeology: The problem is that most organizations are sitting on a goldmine of information they can't use because it's scattered across countless systems, trapped in poorly named containers, and lacks coherent context or ownership. "Most of the time spent getting an AI project up and running is just figuring out what data you have and getting it ready for training or RAG. The first big challenge is that most organizations don’t even realize what they have. It’s a sprawl of file systems, volumes, and buckets, scattered everywhere and named things like LUN 742673. The person who created them may be gone, and whoever came next named things completely differently. The first real step is getting operational control," explains Kenney.

The dataset as organism: The solution, then, involves a deep cultural change in how data is managed and owned. While the industry often calls this "data as a product," Kenney offers a sharper definition, preferring to think of the new model as creating a "construct" or a wrapper around the data that gives it life. "We need to stop thinking of a dataset as just a thing in a storage device," he says. "It is its own living thing. It has policy, context, and a known lineage. I know where it came from, where it was generated, and the intent behind it, which allows me to make good decisions instead of just guessing."
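To make the "construct" idea concrete, here is a minimal Python sketch of a dataset record that carries its own policy, context, and lineage. Every name in it (`Dataset`, `Policy`, the field names, the sample values) is a hypothetical chosen for illustration; it is not Pure Storage's data model or API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Policy:
    # Hypothetical policy fields, assumed for this sketch only.
    recovery_window_days: int
    max_copy_age_days: int


@dataclass
class Dataset:
    name: str
    owner: str
    intent: str                      # why the data exists
    source: str                      # where it was generated
    created: date
    policy: Policy
    parent: "Dataset | None" = None  # lineage link to the dataset this was copied from

    def lineage(self) -> list[str]:
        """Walk parent links from this dataset back to the original."""
        chain, node = [], self
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return chain

    def copy(self, name: str, owner: str, created: date) -> "Dataset":
        """A copy inherits intent, source, and policy, and records its parent."""
        return Dataset(name, owner, self.intent, self.source,
                       created, self.policy, parent=self)


# Illustrative values only.
prod = Dataset("claims-2024", "claims-team", "fraud model training",
               "claims-db export", date(2024, 1, 5), Policy(30, 30))
dev = prod.copy("claims-2024-dev", "ml-team", date(2024, 2, 1))
print(dev.lineage())  # ['claims-2024-dev', 'claims-2024']
```

Because the copy keeps a reference to its parent and shares the same policy object, a question like "where did this come from, and what rules apply to it?" can be answered from the dataset itself rather than from tribal knowledge.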

The technical foundation that makes this possible is a unified infrastructure where systems share a common language. At Pure Storage, this is enabled through Pure Fusion, which connects systems so they can intercommunicate and operate as a single, coordinated environment rather than isolated parts.

A world without traffic: "Think of it like cars on the road. Right now, we don't all speak the same language. The only communication we have is a horn, some hand signals, and a blinker. But imagine a future where all cars are autonomous and they all intercommunicate," says Kenney. "There will never be an accident, because no car will move into another's space. You'll have no traffic because they merge perfectly. The efficiency gains that come with that level of unification are huge."

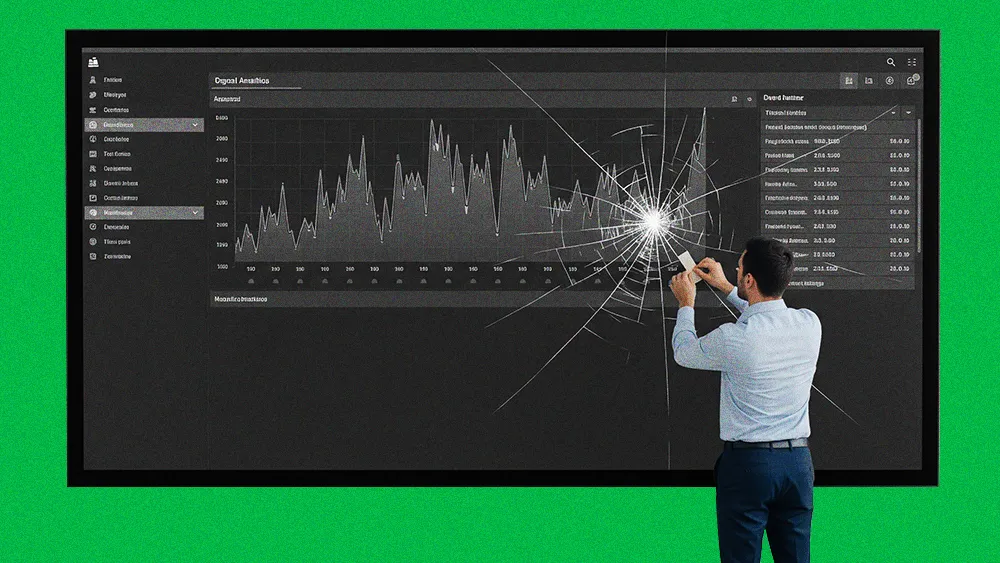

Copy chaos: The model earns its value by eliminating dataset sprawl before it turns into risk. Kenney says most breaches don’t start with production systems but with forgotten copies no one is monitoring. "Exfiltration attacks usually happen on a copy of data someone created and forgot about," he explains. "With automated policy, no copy of production data is allowed to live past 30 days. The system removes it automatically, and because policy and lineage apply to every copy, you gain control over the dataset itself."
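The 30-day rule Kenney describes can be sketched as a simple age check over known copies. This is an illustrative Python sketch under stated assumptions: `Copy`, `expired_copies`, and the sample names and dates are hypothetical, and a real platform would discover copies through lineage metadata rather than a hand-built list.

```python
from datetime import date, timedelta
from typing import NamedTuple

MAX_COPY_AGE = timedelta(days=30)  # the 30-day limit from the quote above


class Copy(NamedTuple):
    name: str
    source: str    # production dataset this copy was made from
    created: date


def expired_copies(copies: list[Copy], today: date) -> list[Copy]:
    """Return copies that have lived past the allowed age."""
    return [c for c in copies if today - c.created > MAX_COPY_AGE]


# Illustrative values only.
copies = [
    Copy("claims-dev-old", "claims-2024", date(2024, 1, 15)),
    Copy("claims-dev-new", "claims-2024", date(2024, 2, 20)),
]
to_remove = expired_copies(copies, today=date(2024, 3, 1))
print([c.name for c in to_remove])  # ['claims-dev-old']
```

In practice the returned list would feed an automated removal step, so a forgotten copy is cleaned up whether or not anyone remembers it exists.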

Policy under pressure: The value of the model becomes clearest under pressure, when manual processes tend to fail. Kenney describes a ransomware scenario where context-aware enforcement turns a potential disaster into an automated fix. "If production data is set to a thirty-day recovery policy, but someone accidentally updated it to seven days, enforced controls would catch this, and in the event an attack happened on day eight, the policy enforcement would have saved the day, allowing the organization to recover," he says. "With enforced controls, the system updates everything automatically. Protection is restored without anyone touching individual systems, cutting both risk and operational effort at the same time."
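The scenario in that quote amounts to drift detection: compare what each system is actually configured to do against the declared policy, and correct any mismatch. The Python sketch below shows the idea under loud assumptions: `SystemConfig`, `enforce`, and the array names are hypothetical, and this is not how Pure Fusion or any specific product implements enforcement.

```python
from dataclasses import dataclass


@dataclass
class SystemConfig:
    name: str
    recovery_window_days: int  # what the system is currently set to


def enforce(declared_days: int, systems: list[SystemConfig]) -> list[str]:
    """Reset any system that has drifted from the declared recovery window.

    Returns the names of the systems that were corrected.
    """
    corrected = []
    for system in systems:
        if system.recovery_window_days != declared_days:
            system.recovery_window_days = declared_days
            corrected.append(system.name)
    return corrected


# Illustrative values: one array was accidentally changed from 30 days to 7.
systems = [SystemConfig("array-a", 30), SystemConfig("array-b", 7)]
fixed = enforce(30, systems)
print(fixed)  # ['array-b']
```

Run on a schedule or on every configuration change, a check like this would restore the thirty-day window before the day-eight attack in Kenney's example, without anyone touching individual systems.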

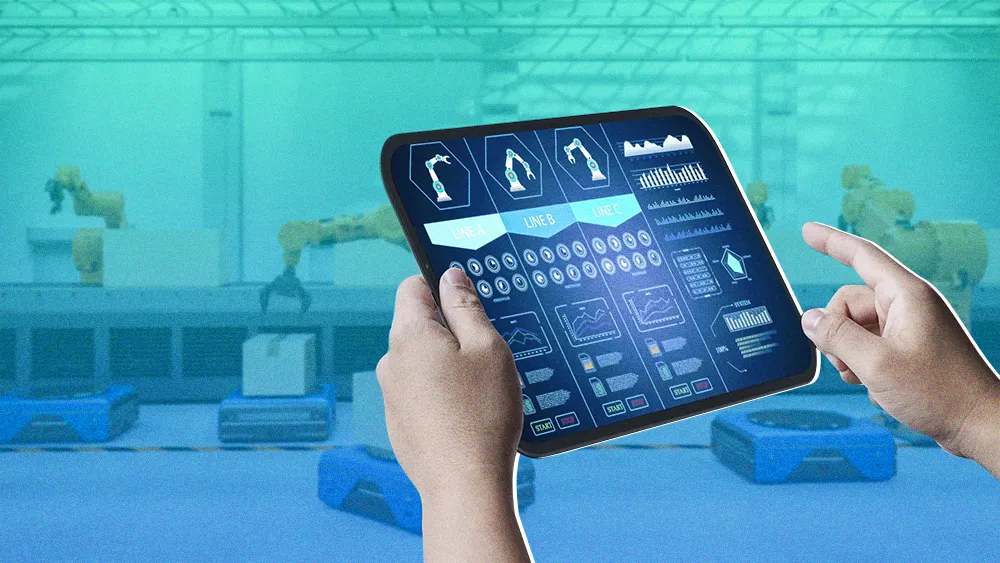

That same principle of intercommunication extends to performance itself. In a unified system, rebalancing allows workloads to meet their promised SLAs by drawing from a shared global pool of resources. Instead of static allocations and manual tuning, the infrastructure continuously adjusts in real time, moving workloads where they need to be to maintain performance guarantees as conditions change.

For Kenney, this is what makes AI-driven automation viable at scale. When context, policy, lineage, and performance guarantees are embedded directly into datasets, systems can stop reacting and start making informed decisions on their own. "Once the data understands itself, orchestration becomes possible," he concludes. "That’s when copilots, agents, and model context protocols can actually make reliable decisions, because the data they’re acting on is governed, understood, and guaranteed."