
Enterprise-Ready, Not Just AI-Ready is the New Mandate for Success

Kaycee Lai, VP of Enterprise AI and Analytics at Pure Storage, discusses how collapsing manual data workflows is essential for real-time, AI-driven decision-making.

Key Points

Enterprises rush to deploy AI, but fragmented data, legacy silos, and slow human-driven workflows turn AI speed into a liability, breaking trust when answers are slow, wrong, or inconsistent.

Kaycee Lai, VP of AI & Analytics at Pure Storage, explains that AI isn’t just another workload but a force that exposes long-hidden data, governance, and infrastructure flaws at real-time scale.

The path forward is to treat AI as a tier-one enterprise workload by unifying data access, automating AI-ready pipelines, and enforcing existing standards for governance, security, performance, and availability from day one.


For many enterprises racing to adopt AI, the biggest hurdle isn’t the sophistication of the algorithm or the power of the model. It’s the readiness of the underlying data. The rise of agentic AI, GenAI, and other automation use cases exposes years of accumulated technical debt, making the creation of AI-ready datasets a primary bottleneck. That challenge puts AI projects at risk and stresses data governance policies in ways older workloads never did.

We spoke with Kaycee Lai, the Vice President of Artificial Intelligence & Analytics at Pure Storage, where he defines the strategy for Enterprise AI. A member of the Forbes Technology Council who pioneered the concept of the Data Fabric as the Founder of Promethium, Lai has a two-decade track record of leading companies like Hitachi Vantara and VMware through growth and successful acquisitions, holding multiple patents in AI along the way. He frames the problem in stark terms.

For decades, enterprises optimized systems for isolated workloads, slow-moving analytics projects, and human-mediated processes where delays, silos, and workarounds were the norm. "AI is forcing organizations to confront years of fragmented data and infrastructure decisions that were manageable before but are unsustainable now," says Lai. With AI demanding real-time access, consistency, and massive scale across the entire data footprint, the buffers that once hid these weaknesses and dependencies have disappeared, exposing just how brittle many legacy architectures have become.

No place to hide: The problem, Lai maintains, begins with a fundamental misunderstanding of what AI actually represents. "A lot of folks used to think AI was just another workload, like virtualization," he says. "What we’ve seen is that AI is the workload that exposes everything you weren’t ready for, from infrastructure to process, at a speed and scale we’ve never seen before." That shift is resetting user expectations inside the enterprise, creating what Lai calls a "pretty brutal" feedback loop where slow systems or inaccurate answers are immediately rejected, not tolerated or worked around.

Months to minutes: "For the longest time in data analytics, getting an answer from a data engineering team in three months was cause for celebration. AI has changed that. The demand is no longer one question every three months: it's three questions a minute." This shift has collapsed traditional data workflows built around slow, manual processes, forcing enterprises to rethink everything from pipeline automation to infrastructure and storage performance. What once was tolerated in a batch-driven world now becomes a massive constraint for real-time, AI-driven environments.

The emerging user paradigm, reprogrammed by AI to simply "ask the question," often collides with a legacy infrastructure built on silos. According to Lai, these silos were a deliberate and, unfortunately, unavoidable byproduct of past infrastructure constraints, which forced workloads to be separated across different protocols, such as block for structured data and file for unstructured data, creating a world "optimized for storing things, not for interaction." A logical business query, such as asking about product margins in Latin America cross-referenced with employee data, suddenly requires breaking a model built on the human-centric workflow of "ask Chris for finance, ask Margaret for HR." As a result, the enterprise-scale demands of this new world are massive.

Lost in translation: Lai says the phenomenon of AI hallucination is a predictable garbage-in, garbage-out problem. He frames it as a data problem instead of a mysterious flaw in the model itself, stemming from a lack of business context. The AI does its best to infer meaning, but it can't know the tribal knowledge trapped within the enterprise. Even the most advanced AI can misinterpret data when context is missing or documentation is outdated. Lai explains, "An AI won't know that certain documentation is out of date, and should be ignored, or how to distinguish between a general request for revenue and the specific need for taxable revenue." The solution, Lai says, is rooted in taking a holistic view of AI readiness.

Shortcut syndrome: The approach begins with unified, AI-ready platforms that handle multiple protocols simultaneously. Such platforms allow companies to avoid what Lai describes as a common, and flawed, compromise: sacrificing enterprise-grade features for speed. "We spent decades establishing the need for resiliency, availability, and governance for our tier-one workloads. But then for AI, the thinking shifted to a narrow focus on making it fast, scalable, and cheap." This shift highlights a common misconception enterprises grapple with: AI being treated as a special, lightweight workload, even though it interacts with the same critical systems that require enterprise-grade reliability.

The race car in traffic: Lai warns that prioritizing speed over production-readiness means that many AI initiatives never reach real-world deployment, let alone scale. "This approach never made sense because it implies you're never going to put what you consume with AI into production," he says. "It's like buying a race car and parking it in rush hour traffic."

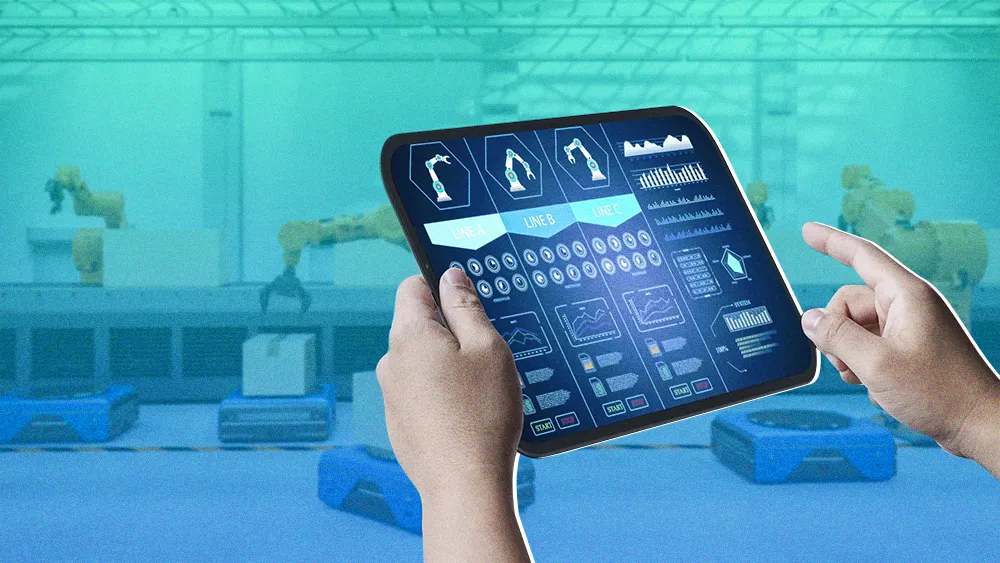

Beyond the technical challenges of the platform itself, Lai says that the human labor component and the need to keep humans in the loop present an even greater impediment. The chaotic, manual process of discovering, preparing, and indexing data is, according to Lai, where most projects stall. Given the highly unpredictable and continuous nature of AI, foundational features like maintaining uptime during hardware maintenance have evolved from a simple convenience into a key enabler for production workloads. Automating the intricate data preparation pipeline with solutions like DataStream, developed in conjunction with NVIDIA, is the key to achieving scale and compliance.

The human bottleneck: Lai firmly believes even the fastest GPUs and storage arrays can't make up for slow data preparation. In his view, "If you don't automate the human-labor component of the AI pipeline, your projects will stall because you're unable to consume with confidence." This is the simple reality enterprises are facing today: without AI-ready datasets, AI may produce outputs quickly, but confidence, reliability, and production-readiness will remain out of reach.

Lai provides a clear directive for CIOs, CAIOs, and data leaders alike, moving the focus from the technology itself toward the foundational principles that enable enterprises to succeed. "AI-ready today does not equate to enterprise-ready," he says. "We need to flip that script and impose our existing enterprise standards for governance, security, performance, scale, and availability on AI, not compromise them for AI." While some compromises might occur in the pilot stage, compromising on those standards is the very reason most AI projects fail. "If you want to accelerate innovation, don't make enterprise readiness the last thing you think about. Make it the first," concludes Lai.